The future has arrived. Algorithms control our cars and houses, airplanes, space stations, plants and factories. And it feels okay, right? No rise of the machines as of 2022. It even feels nice, since automation basically saves us humans from routine work.

The same applies to quality assurance and test automation. Huge, highly loaded products like the Amazon marketplace or some fancy gaming platform are covered with automated testing scripts inside and out. Does the system cope with the load on Black Friday? Is everything chill at the checkout? Simple and complex scripts do a whole lot of great work for us.

Will automated testing replace manual QA engineers then? It does not look like it is possible or necessary today. Yet the collaboration of manual QA engineers and algorithms has been evolving for years now and it feels like it is going to bring more tempting opportunities in the future. We’ll elaborate in three statements.

01 Humans are incomparably better than machines at understanding other humans. Machines are incomparably faster than humans and make fewer mistakes.

When it comes to evaluating user experience as a whole, it is always manual QA engineers who do that. An algorithm can neither understand nor predict human motivations and emotions (or can it?), nor can it evaluate the aesthetics or the convenience of the interface design. An automated script can’t figure out what a human wants and cares about, and therefore why some solutions perform well and others do not.

A proper testing script is still a great help, though. An algorithm can’t tell whether the design solves the problem or achieves the goal, yet it can check whether the x and y coordinates change correctly on different interfaces far faster than a human can, and with fewer mistakes.
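To make the coordinate check concrete, here is a minimal sketch in Python. Everything in it is an assumption made up for illustration: the layout rule (a button centred horizontally with a fixed bottom margin), the sizes, and the measured positions, which in a real project would come from a UI testing tool rather than a hard-coded dictionary.

```python
# Hypothetical layout rule: a button is centred horizontally and sits
# a fixed margin above the bottom edge of the screen.
BUTTON_WIDTH = 200
BUTTON_HEIGHT = 48
BOTTOM_MARGIN = 24


def expected_button_position(screen_width, screen_height):
    """Return the (x, y) of the button's top-left corner for a given screen."""
    x = (screen_width - BUTTON_WIDTH) // 2
    y = screen_height - BOTTOM_MARGIN - BUTTON_HEIGHT
    return x, y


def find_misplaced(measured):
    """Compare measured positions with the layout rule.

    `measured` maps (screen_width, screen_height) to the (x, y) reported
    by the interface under test. Returns the screens where they differ.
    """
    return [
        screen
        for screen, position in measured.items()
        if position != expected_button_position(*screen)
    ]


# Made-up measurements for three screen sizes; the last one is 10 px off.
measured_positions = {
    (1920, 1080): (860, 1008),
    (1366, 768): (583, 696),
    (375, 667): (97, 595),
}

print(find_misplaced(measured_positions))  # -> [(375, 667)]
```

The script has no opinion on whether the button looks good there. It only confirms, in a fraction of a second, that the numbers match the rule on every screen size it is given.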

02 Testing automation takes time and a solid budget. And it pays off greatly for some projects in the long run.

It doesn’t make sense to invest in a costly automation setup until you have a big project on the go. Big, rapidly growing and highly loaded projects, on the other hand, will usually benefit from automation at some point.

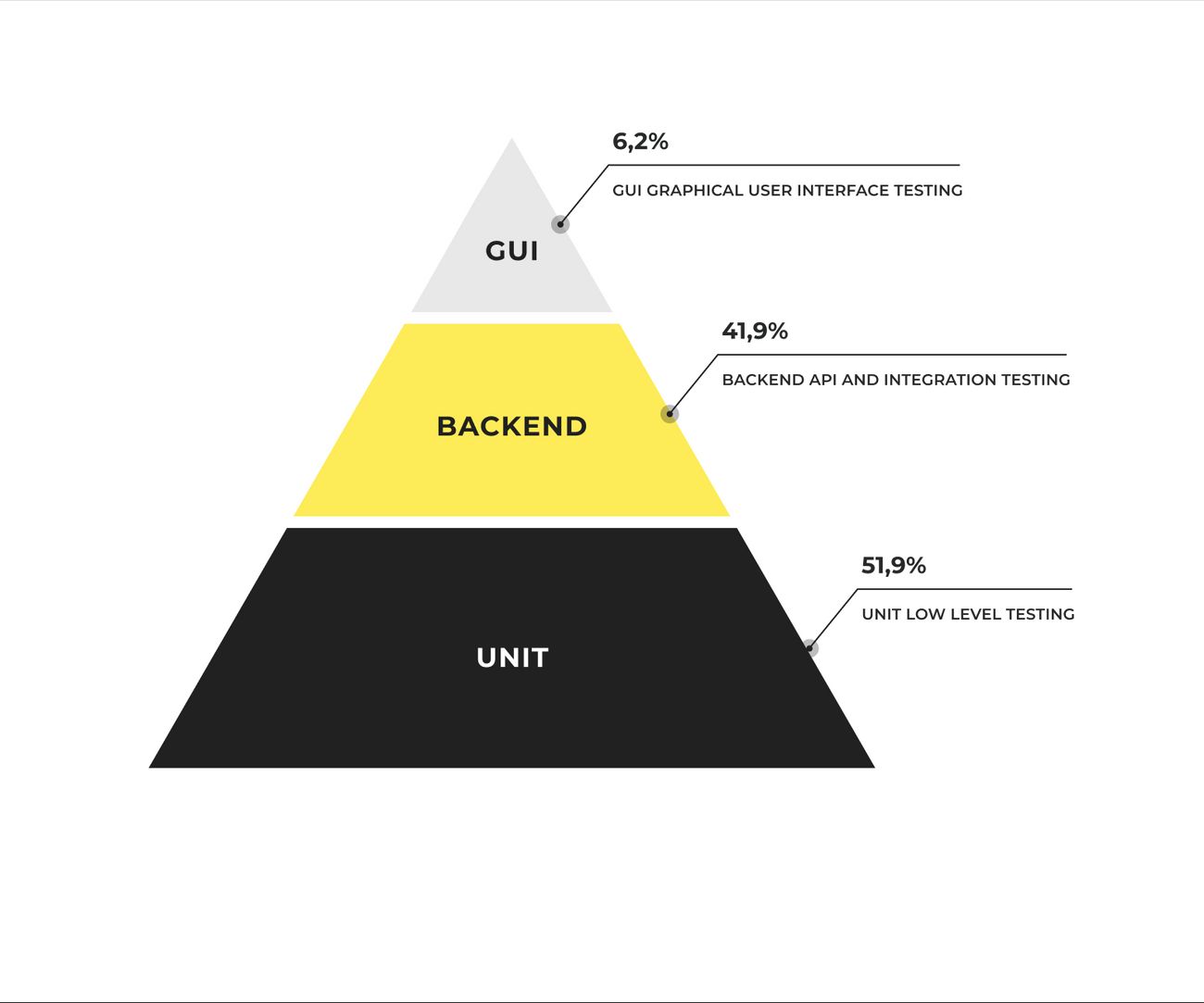

In a nutshell, automated test types depend on the level of abstraction. Using Mike Cohn's test pyramid, we can divide them into three groups:

Integration tests check how different systems interact with each other. E2E tests examine processes from the user’s side: is registration convenient, is log-in smooth? There are also unit tests, which check the operation of a small part of the code. Developers regularly update the product, add features, refactor code and make other changes. Testing the entire system after each update is tedious and inefficient, so only the updated or corrected parts of the code are run through unit tests.
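For readers who have never seen one, a unit test can be as small as the sketch below, written with Python's built-in `unittest` module. The `cart_total` function and its discount rule are hypothetical and stand in for any small piece of code a developer has just changed.

```python
import unittest


def cart_total(prices, discount_percent=0):
    """Hypothetical function under test: sum the prices, then apply a discount."""
    if not 0 <= discount_percent <= 100:
        raise ValueError("discount_percent must be between 0 and 100")
    subtotal = sum(prices)
    return round(subtotal * (100 - discount_percent) / 100, 2)


class CartTotalTest(unittest.TestCase):
    """Each method checks one small behaviour of cart_total in isolation."""

    def test_no_discount(self):
        self.assertEqual(cart_total([10.0, 5.5]), 15.5)

    def test_discount_applied(self):
        self.assertEqual(cart_total([100.0, 50.0], discount_percent=10), 135.0)

    def test_invalid_discount_rejected(self):
        with self.assertRaises(ValueError):
            cart_total([10.0], discount_percent=150)
```

Saved to a file, these tests can be run with `python -m unittest`. Because they touch only one function, they finish almost instantly, which is what makes it practical to rerun them after every change.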

In a best-case scenario, the parts of the code with a critical impact on product performance are covered with all types of scripts – mostly E2E and unit tests, and hybrids of the two. However, most product projects nowadays do not follow best practices and sacrifice quality, covering the code with fewer tests than it really needs. We are not here to blame though, since modern businesses operate in a fast-paced environment and it is often vital to launch faster rather than flawlessly.

03 Some things can’t be automated. Human QA can’t test everything.

As wonderful as 100% automation sounds, it’s not feasible in practice, now or in the long run. There will always be some sections of the system where human scrutiny is essential. Firstly, it is a human who creates the algorithm in the first place. Secondly, it’s unlikely that automation will ever be able to produce error-free results, and someone has to clean up after the robots’ mistakes.

There are complex processes we can’t automate, such as testing activity-tracking applications. These applications are built from many integrated systems with a plethora of different components, all of which talk to each other in real time (printers, monitors, software, APIs, cloud services, etc.). Automated scripts, however sophisticated, can’t test all these dissimilar components at once. Plus, regardless of what these applications track – exercise movements or space utilization – automated tools can’t imitate human movements with enough precision to justify their use.

Load testing, on the other hand, is always automated. What is the point of hiring hundreds of manual QA engineers when a simple script can do that, right?
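Here is roughly what such a "simple script" could look like, as a sketch using only the Python standard library. The `fake_checkout` function is a made-up stand-in for a real request to the system under test, and its 10 ms delay and "every 50th order fails" rule are assumptions chosen purely so the example runs without a server.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_checkout(order_id):
    """Stand-in for one request to the system under test (hypothetical)."""
    time.sleep(0.01)  # pretend the server needs 10 ms to answer
    return order_id % 50 != 0  # made-up rule: every 50th order fails


def run_load_test(request, total_requests, concurrent_users):
    """Fire `total_requests` calls using `concurrent_users` worker threads."""
    started = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrent_users) as pool:
        results = list(pool.map(request, range(1, total_requests + 1)))
    elapsed = time.perf_counter() - started
    return {
        "succeeded": sum(results),
        "failed": len(results) - sum(results),
        "seconds": elapsed,
    }


# Fifty simulated users placing two hundred orders between them.
report = run_load_test(fake_checkout, total_requests=200, concurrent_users=50)
print(report)
```

In practice teams usually reach for a dedicated load-testing tool rather than a hand-rolled script, but the idea is the same: one machine plays the part of hundreds of users and counts what went wrong.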

How will quality assurance change in the future?

Algorithms will certainly evolve and take more work off humans’ shoulders, yet humans will inevitably be there: either manually testing the parts algorithms can’t cope with, or coding automated testing scripts and monitoring their performance.

Since human-algorithm collaboration keeps developing and strengthening, the job description for a conscientious QA engineer might change and require some coding experience. However, the core competencies will most likely remain the same: curiosity, thoroughness and effective communication, with a flair for listening and diplomacy.